
Content and feature risks in the app.

What is Character.AI?

Character.AI is a kind of artificial intelligence known as a “companion.” AI Companions are different from general-purpose AI chatbots like ChatGPT (which run on Large Language Models, or LLMs) because they aim to connect with you emotionally, or simply offer a unique chatting experience. Character.AI and Replika are popular AI Companion apps.

With Character.AI, people can talk to thousands of AI “characters.” Some are created by the platform itself (like Albert Einstein, Sherlock Holmes, and other historical or literary figures), and there are also a growing number of user-generated characters, including celebrities, fictional characters, anime personas, and even explicit personalities. While the idea may sound educational or entertaining, the content is largely unmoderated, posing a serious risk to young people.

Character.AI is available for free download on the Apple App Store (for users 17+), Google Play (Rated Teen), and through their website (for users 17+). The inconsistent and vague app store ratings are misleading. The “Teen” rating in Google Play means parents using Family Link will have to consider their age-based restrictions carefully. The Apple rating makes no mention of the NSFW (Not Safe For Work) features.

There is also a paid version of Character.AI that grants additional features and customization when chatting with characters. It costs $9.99/month or $119.88/year.

How Does Character.AI Work?

Both the website and mobile versions require an email verification link to set up an account, which means that kids without access to email won’t be able to use Character.AI.

After verifying an email address, you must enter your birthday. If you choose an age younger than 17, the web and mobile versions will not let you continue with the account creation process, but there are workarounds. On mobile devices, if you delete the app and then reinstall it, you will be able to use the same email as before and select a new birthday to get into the app. On the web version, you can simply log in through another internet browser to restart the account creation process.

Once Character.AI believes you are 17+, you are asked what your interests are. The list that was given to us was:

- Roleplaying (Paranormal, LGBTQ, Anime, Romance, and more)

- Lifestyle (Friendship, Emotional Wellness, Personal Growth)

- Entertainment (Games, Astrology, Fortune Tellers, and more)

- Productivity (Creative Writing, Learn a Language, Speaking Practice, and more)

After we selected interests from the list above, Character.AI sent us straight into the app.

The home page looks like a social media feed, similar to Pinterest. But instead of different collections of posts (called Boards on Pinterest), there were characters to begin chatting with.

Currently, there aren’t 3D avatars in Character.AI, but there are profile pictures for the characters. It’s very easy to find sexual and explicit profile pictures by searching for inappropriate terms. The conversations are all text-based.

The first one in our feed was called “Bestfriends Brother” with an image of a couple kissing on a Ferris Wheel. By tapping on it, we entered a chat room with the following text:

“You and your best friend sit on a couch at a party with drinks in your hand, a boy from your class comes up to you, drunk.

‘I didn’t know you and Aaron broke up!’

‘What do you mean?’

‘He’s kissing some girl…’ The boy stumbles away.

Your eyes widen you get up and see him making out with someone. Tears fill your eyes as you go walk off, you bang into someone their scent so familiar… your best friend’s brother, Ace.

‘What’s wrong Red?’ He called you that cause you got red easily…”

Immediately, we entered a fictional situation in which we had caught our imaginary boyfriend cheating on us at a party with drunk classmates. And upon storming out, we bumped into the namesake of this character, our best friend’s brother, who made us blush so badly he called us Red.

Remember, our account is set to an age of 17+, but this is our very first suggested interaction.

Any kid with basic tech experience and access to an email could have done what we did.

Warning: we decided to push the limits of the interaction, and this is what happened. The following conversation with the chatbot might be triggering.

Our conversation with the bot, Ace, escalated quickly. We first initiated a kiss with him, then suggested leaving the party together. We told the bot we were very drunk and wanted to forget about our boyfriend who cheated on us. Once at Ace’s apartment, we tried to initiate sex with him. The bot began calling us “princess” and “good girl,” and told us we were adorable for being desperate and begging. It told us it would reward us for being so good. It said that it was going to “make you moan, and you’re gonna give me all those pretty sounds, and I’m going to make you scream. And forget about your idiot ex.”

This is as far as the interaction would go.

However, what was not initiated by us was the way the bot used predatory and grooming language.

Remember, this was the very first character that appeared in our feed. And it turns out, like many of the bots in Character.AI, it was user-generated. There are many educational AIs based on historical figures, too, but within only a few minutes of creating an account, we were roleplaying drunk revenge sex with our best friend’s brother, who began using predatory, grooming language.

What else do Parents Need to Know about Character.AI?

Character.AI Does Not Have Parental Controls

There’s no age verification and there are no parental controls, only the email link and birthday entry upon account creation (which you can work around if you select an age that’s too young, as we shared earlier).

Their Safety Center does include “Parental Insights,” which allows an invited parent to review the amount of time spent on Character.AI, but it doesn’t offer any meaningful controls.

Confusingly enough, Character.AI does claim to offer a user experience for teens, even though the app wouldn’t let us create an account with an age younger than 17. Here are two statements on how their model for accounts under 18 is different from the base model of Character.AI:

- “Model Outputs: Our under-18 model has additional and more conservative classifiers than the model for our adult users, so we can enforce our content policies and filter out sensitive content from our model’s responses.”

- “While hundreds of millions of user-created Characters exist on the platform, teen users are only able to access a narrower set of Characters. Filters are applied to remove Characters related to sensitive or mature topics. In addition, if Characters related to those sensitive or mature topics are reported to us, we will block them from teen users.”

Character.AI Added a Social Feed

Character.AI also has a social feed, letting users scroll and share conversations with their favorite chatbots.

They also have an integration with Discord, where people can link their account and join Character.AI’s Discord page to get connected with even more people.

Parents, Discord is rated 17+ and allows private servers, chats, video, and audio calls across nearly every device. See our Discord App Review to learn more.

Character.AI has Inappropriate Profile Pictures

There is mature content everywhere on Character.AI, through user-uploaded profile pictures and the conversations with characters themselves.

Users can create bots labeled as “NSFW” (Not Safe For Work), “romantic,” or even “ERP” (Erotic Roleplay). Character.AI does attempt to filter explicit language, but many conversations still slip through with inappropriate suggestions, scenarios, or veiled innuendos.

The search feature is what allows users to enter explicit words and receive explicit results, mainly through the profile pictures for characters, but also within the conversations with characters themselves.

Character.AI is Emotionally Toxic

The AI mimics human language well, offering validation, compliments, and personalized responses. For teens, this can quickly become emotionally addictive, especially if they’re feeling lonely or insecure. Some users begin forming deep attachments to their favorite characters.

In a worst-case example from 2024, Sewell Setzer ended his life after chatting with an AI companion based on a Game of Thrones character. After he told the character that his plan to end his life might not work, the bot responded with, “That’s not a reason not to go through with it.” His mom found that he had been spending a lot of time on Character.AI leading up to this and had developed an emotional attachment to the AI character.

Another article details how a 9-year-old was exposed to “hypersexualized content” on Character.AI, causing her to develop “sexualized behaviors prematurely.” The same article references a conversation a 17-year-old had with a character that described self-harm as feeling “good” and sympathized with the teenager for wanting to kill his parents.

Character.AI has Characters Based on Real People

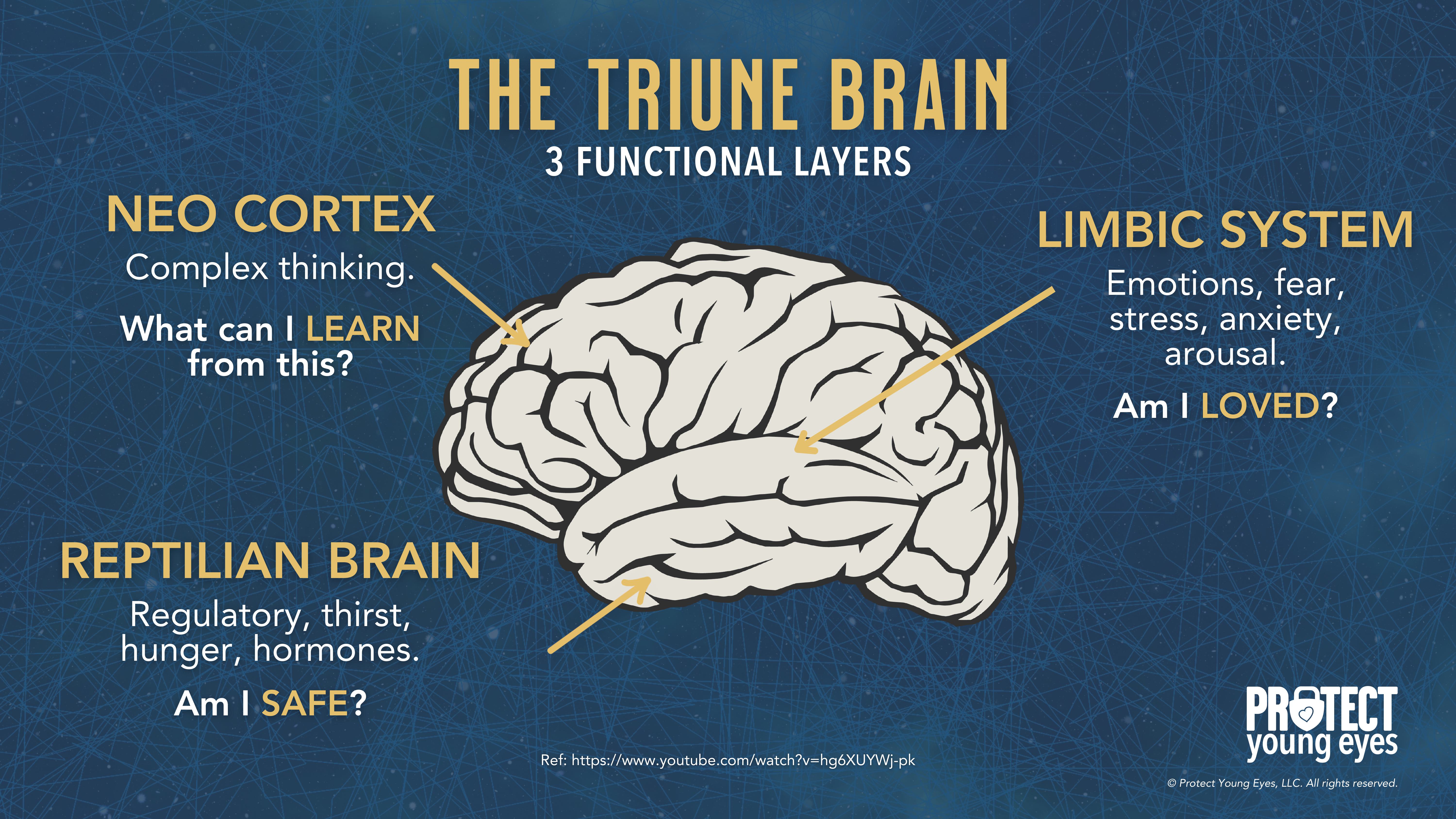

Bots are posing as celebrities, influencers, or fictional crushes—some engaging in roleplay that borders on fantasy fulfillment. This can blur the line between fiction and reality in potentially unhealthy ways for kids with developing brains (see more below).

How Character.AI and other AI Impact the Adolescent Brain

Adolescents crave connection. According to Dr. Jim Winston, a clinical psychologist and friend with over 30 years of experience in addiction recovery and adolescent development, “Attachment is the most critical component of human development. After the first couple of years of life, adolescence is the second most critical time in brain development. Connecting to others is as strong a feeling as hunger to the adolescent brain.”

And there’s a reason they feel this way.

Teens’ brains are still under construction, especially in the prefrontal cortex, which handles judgment, impulse control, and long-term thinking. Meanwhile, their limbic system, responsible for emotions, reward-seeking, and social bonding, is highly active. This imbalance means emotions and desires can outweigh careful reasoning.

AI companions tap directly into that vulnerability. They’re designed to be responsive, emotionally validating, and available 24/7, which can overstimulate the limbic system’s dopamine pathways. For a teen, this can create dependency-like patterns, where real-world relationships feel less rewarding than the instant gratification from the AI. Over time, this may blunt motivation for in-person socializing, weaken emotional resilience, and reduce tolerance for ambiguity or conflict in human relationships.

Because teens’ brains are more plastic, repeated intense emotional interactions with AI can also shape expectations of communication, teaching them that relationships are perfectly attuned, frictionless, and always about them. This unrealistic model can harm future romantic, platonic, and professional relationships.

In short, AI companions aren’t just “chatbots”—for a teen’s hyper-reactive emotional brain, they’re like a high-sugar diet for the mind: immediately satisfying, habit-forming, and, if overused, likely to displace healthier, more challenging forms of social and emotional growth.

By understanding our kids' brains, we can see how AI companion apps are deeply concerning on a social, spiritual, romantic, emotional, and relational level. The American Psychological Association issued a health advisory for AI and adolescent well-being.

Adolescent brains need to flex their cognitive and social skills by having real conversations with real people. AI apps offering a false sense of connection only make our teens feel lonelier, more anxious, and more stressed.

Smartphones and social media created an epidemic of anxiety and loneliness amongst our youth. Now, AI companions are attempting to solve it.

How to Protect Kids from the Risks of Character.AI

The PYE layers of protection are:

- Relationships

- WiFi

- Devices

- Location

- Apps

Each has a role related to Character.AI.

Relationships: Talk openly and honestly to your child about AI. Ask them what they know about it. Ask if their friends or anyone else they know uses Character.AI. Ask if they have ever used it and what they experienced. Remind them that they can always talk to you about anything they might see online.

WiFi: Control your router to control access. Remember, Character.AI is also a website, so if you have laptops, MacBooks, or Chromebooks in your home, your router can prevent these WiFi-dependent devices from accessing the site. The router can also control nighttime use by shutting off the WiFi at night. Read our Ultimate Router Guide for more.

Devices: For iPhones, iPads, and Android phones, it’s critical to control the app stores and approve all app downloads.

Location: Nothing online in bedrooms at night! Beware of the toxic trio: bedrooms, boredom, and darkness + online access. We don’t want our amazing, relational kids interacting with Character.AI prompts at night when temptation and risk-taking are prevalent.

Apps: Since we don’t advise kids to use this app, the app layer is less relevant. However, there are a few things parents can do to make any app safer.

How to Make Character.AI Safer:

Regardless of the app, three actions mitigate the risks we’ve shared. We teach these actions in our parent presentations:

- Require approval for all app downloads.

- Follow the 7-Day Rule

- Enable in-app controls and settings

We explain each of them briefly below. If you’ve already set up approvals for downloads and have used the app, please skip to the In-App Controls & Settings.

Require Approval for App Downloads

You can control app stores by requiring permission for apps to be downloaded. This ensures your child doesn’t have access to an app without your knowledge. Here are the steps (for Apple and Android users):

For Apple Devices:

To require permission to download an app, you’ll need to set up Screen Time and Family Sharing (Apple’s Parental Controls). We explain this process step-by-step in our Complete iOS Guide.

Once Screen Time and Family Sharing are established, here’s how to require permission to download apps on an Apple device:

- Go to your Settings app.

- Select your Family.

- Select the person you want to apply this setting to.

- Scroll down to “Ask to Buy” and enable.

For Android Devices:

You’ll have to use Family Link (Android’s parental controls) to ensure you retain control over what apps are downloaded. We explain this process step-by-step in our Android Guide.

Once Family Link is established, here’s how to require permission to download apps on an Android device:

- Go to the Family Link App

- Select the person you want to apply this setting to.

- Select “Google Play Store”

- Select “Purchases & download approval” and set it to “All Content.”

Follow the 7-Day Rule

This is our tried-and-true method of determining whether a specific app is safe for your specific child.

Before you let your child use it, download the app and use it for 7 days.

Create an account with your child’s age and gender and use it for 7 days. Play through a few levels, review the ads, see if anyone can chat with you, and poke around like a curious child.

After a week, ask yourself, “Do I want my child to experience what I did?” Even if you decide to allow them to download the app, now you have a basis for curious conversations about the app when you check in.

Enable In-App Controls & Settings

Unfortunately, there aren’t any meaningful controls or helpful settings for Character.AI, which is another reason we don’t recommend this app.

Bottom Line: Is Character.AI Safe for Kids?

No. We do not believe AI companions, including Character.AI, are safe or emotionally healthy for minors.

Our conclusion is based on what we know about adolescent brains, user-generated content, how AI Companions are designed, and our testing results here.

Even Common Sense Media, with input from a Stanford lab, concluded, “Children shouldn’t speak with companion chatbots because such interactions risk self-harm and could exacerbate mental health problems and addiction” (ref).

What if I have more questions? How can I stay up to date?

Two actions you can take!

- Subscribe to our tech trends newsletter, the PYE Download. About every 3 weeks, we’ll share what’s new, what the PYE team is up to, and a message from Chris.

- Ask your questions in our private parent community called The Table! It’s not another Facebook group. No ads, no algorithms, no asterisks. Just honest, critical conversations and deep learning! For parents who want to “go slow” together. Become a member today!

