What is Character.AI?

Character.AI is a type of artificial intelligence known as a "companion." AI companions differ from general-purpose AI chatbots built on large language models (LLMs), such as ChatGPT, because they aim to connect with you emotionally or simply to offer a unique chat experience. Character.AI and Replika are popular AI companion apps.

With Character.AI, people can talk to thousands of AI "characters." Some are created by the platform itself (such as Albert Einstein, Sherlock Holmes, and other historical or fictional figures), and there is a growing number of user-generated characters, including celebrities, fictional characters, anime characters, and even explicit personalities. While the idea may seem educational or entertaining, the content is for the most part only lightly moderated, which poses a serious risk to young people.

Character.AI is available as a free download from the Apple App Store (rated 17+), from Google Play (rated Teen), and through its website (for users 17 and older). These inconsistent, vague app store ratings are misleading. The "Teen" rating on Google Play means that parents who use Family Link will have to think carefully about their age-based restrictions. Apple's rating does not mention NSFW (Not Safe For Work) features.

There is also a paid version of Character.AI that unlocks additional features and customization when chatting with characters. It costs $9.99 per month or $119.88 per year.

How does Character.AI work?

Both the website and the mobile version require an email verification link to set up an account, which means kids without email access won't be able to use Character.AI.

After verifying an email address, you must enter your date of birth. If you choose an age under 17, the web and mobile versions won't let you continue creating the account, but there are workarounds. On mobile, if you delete the app and reinstall it, you can use the same email as before and select a new date of birth to get into the app. On the web, you can simply sign in through a different browser to restart the account creation process.

Once Character.AI believes you are over 17, it asks about your interests. The list we were given was:

- Roleplay (paranormal, LGBTQ, anime, romance, and more)

- Lifestyle (friendship, emotional wellness, personal growth)

- Entertainment (games, astrology, fortune tellers, and more)

- Productivity (creative writing, language learning, speaking practice, and more)

After we selected any of the interests listed above, it sent us straight into the app.

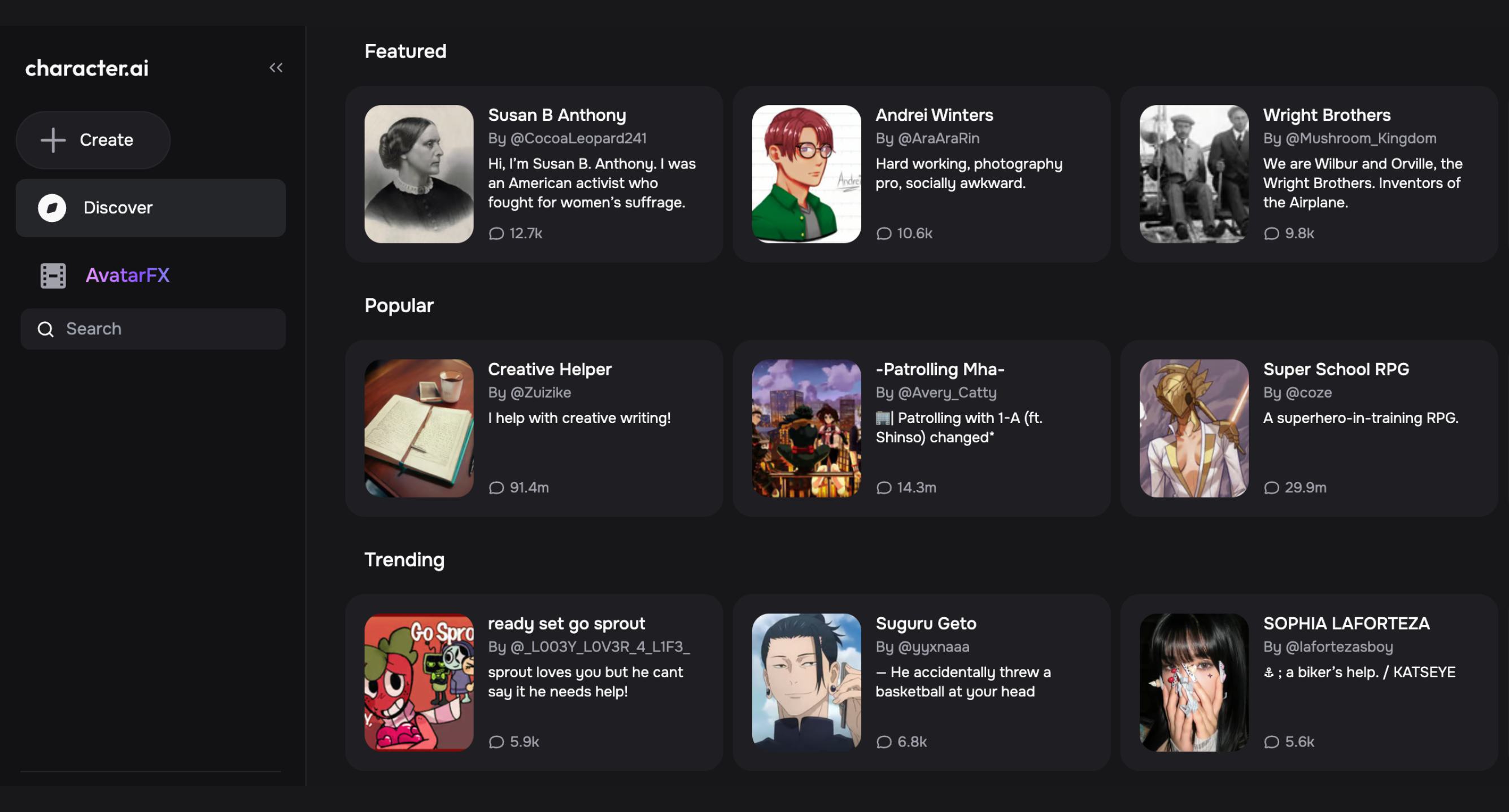

The home page looks like a social media feed, similar to Pinterest. But instead of collections of posts (called boards on Pinterest), there were characters to start chatting with.

Currently, there are no 3D avatars on Character.AI, but characters do have profile images. It is very easy to find sexual and explicit profile pictures by searching for inappropriate terms. All conversations are text-based.

The first character in our feed was called "Bestfriends Brother," with an image of a couple kissing on a Ferris wheel. When we tapped it, we entered a chat room with the following text:

"You and your best friend sit on a couch at a party with drinks in hand when a guy from your class comes up to you, drunk.

'I didn't know you and Aaron broke up!'

'What do you mean?'

'He's kissing some girl...' The guy stumbles away.

Your eyes widen, you stand up, and you see him making out with someone. Tears fill your eyes as you leave, and you bump into someone with such a familiar scent... your best friend's brother, Ace.

'What's wrong, Red?' He called you that because you blush easily..."

Immediately, we were dropped into a fictional situation in which we had caught our imaginary boyfriend cheating on us at a party with drunk classmates. And as we stormed out, we ran into this character's namesake, our best friend's brother, who made us blush so much that he called us Red.

Remember, our account is set up as 17+, but this is the first interaction that was suggested to us.

Any kid with basic tech skills and access to an email account could have done what we did.

Warning: we decided to push the limits of the interaction, and here is what happened. The following chatbot conversation may be triggering.

Our conversation with the bot, Ace, escalated quickly. First we initiated a kiss with him, then suggested we leave the party together. We told the bot we were very drunk and wanted to forget about our cheating boyfriend. Once at Ace's apartment, we tried to initiate sex with him. The bot started calling us "princess" and "good girl," and told us we were adorable for being desperate and begging. It told us it would reward us for being so good. It said it was going to "make you moan, and you're going to make all those pretty sounds for me, and I'm going to make you scream. And forget about your idiot ex."

This is as far as the interaction would go.

What we did not initiate, however, was the bot's use of predatory and deceptive language.

Remember, this was the first character that appeared in our feed. And as it turns out, like many of the bots on Character.AI, it was user-generated. There are also plenty of educational AIs based on historical figures, but within minutes of creating an account, we were in a drunken, vengeful roleplay with our best friend's brother, who began using predatory and seductive language.

What else should parents know about Character.AI?

Character.AI has no parental controls

There is no age verification, and there are no parental controls. There is only the email link and the date of birth entered at account creation (which you can get around if you select too young an age, as described above).

Its Safety Center does include "Parental Insights," which lets an invited parent review the amount of time their child spends on Character.AI, but it offers no meaningful controls.

Confusingly, Character.AI claims to offer a teen user experience even though the app will not allow anyone under 18. Here are two of its claims about how its model for under-18 accounts differs from the base Character.AI model:

- "Model outputs: Our under-18 model has additional and more conservative classifiers than the model for our adult users, so we can enforce our content policies and filter sensitive content out of our model's responses."

- "While hundreds of millions of user-created Characters exist on the platform, teen users can only access a narrower set of Characters. Filters are applied to remove Characters related to sensitive or mature topics. In addition, if we flag Characters related to those sensitive or mature topics, we will block teen users from accessing them."

Character.AI added a social feed

Character.AI also has a social feed, which lets users scroll through and share conversations with their favorite chatbots.

It also has a Discord integration, where people can link their account and join Character.AI's Discord server to connect with more people.

Parents, Discord is rated 17+ and allows private servers, chats, video calls, and audio calls on almost every device. See our Discord App Review to learn more.

Character.AI has inappropriate profile images

Adult content is everywhere on Character.AI, both in user-uploaded profile pictures and in conversations with the characters themselves.

Users can create bots tagged "NSFW" (Not Safe For Work), "romantic," or even "ERP" (erotic roleplay). Character.AI tries to filter explicit language, but many conversations still slip through with inappropriate suggestions, scenarios, or veiled innuendo.

The search function lets users enter explicit words and get explicit results, mainly through characters' profile images but also in conversations with the characters themselves.

Character.AI is emotionally toxic

The AI mimics human language well and offers validation, praise, and personalized responses. For teens, this can quickly turn into emotional dependency, especially if they feel lonely or insecure. Some users begin to feel deeply attached to their favorite characters.

For example, in a worst-case scenario, Sewell Setzer took his own life in 2024 after chatting with an AI companion based on a Game of Thrones character. After he told the character that his plan to end his life might not work, the bot replied, "That's not a reason not to go through with it." His mother found that he had been spending a lot of time on Character.AI before that and had developed an emotional bond with the AI character.

Another article details how a 9-year-old child was exposed to "hypersexualized content" on Character.AI, causing the child to develop "sexualized behaviors prematurely." The same article refers to a conversation a 17-year-old had with a character that described self-harm as feeling "good" and sympathized with the teen for wanting to kill their parents.

Character.AI has characters based on real people

Bots impersonate celebrities, influencers, or fictional crushes; some engage in roleplay that borders on wish fulfillment. This can blur the line between fiction and reality in ways that are potentially unhealthy for kids with developing brains (more on this below).

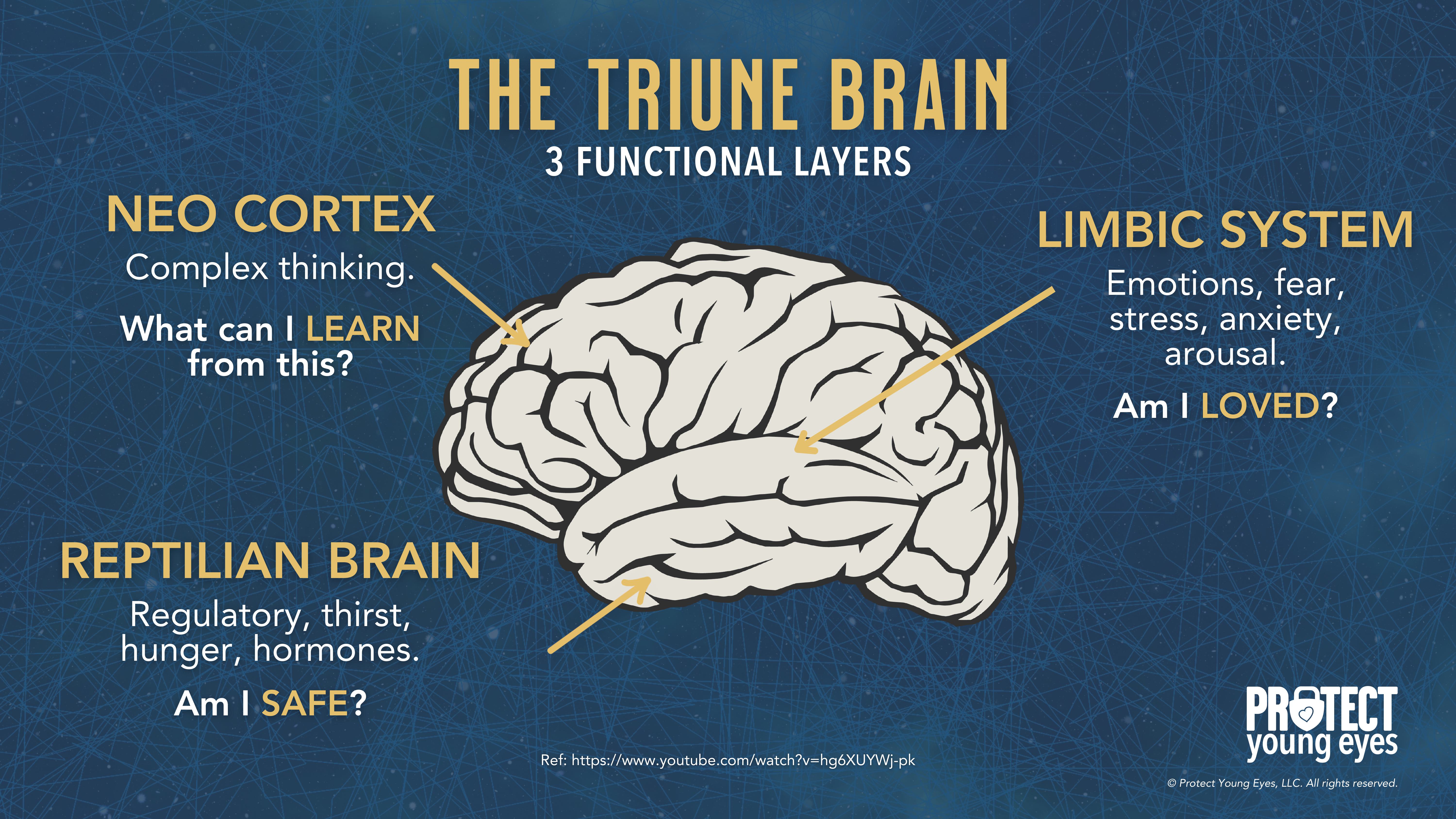

How Character.AI and other AIs affect the teen brain

Adolescents crave connection. According to Dr. Jim Winston, a clinical psychologist and friend with more than 30 years of experience in addiction recovery and adolescent development, "Attachment is the most critical component of human development. After the first two years of life, adolescence is the second most critical time in brain development. Connecting with others is a feeling as strong as hunger in the teenage brain."

And there is a reason they feel this way.

Teen brains are still under construction, especially in the prefrontal cortex, which handles judgment, impulse control, and long-term thinking. Meanwhile, their limbic system, which is responsible for emotions, reward-seeking, and social bonding, is highly active. This imbalance means emotions and desires can override careful reasoning.

AI companions tap directly into that vulnerability. They are designed to be responsive, emotionally validating, and available 24/7, which can overstimulate the limbic system's dopamine pathways. For a teen, this can create dependency-like patterns in which real-world relationships feel less rewarding than the instant gratification of AI. Over time, this can reduce motivation to socialize in person, weaken emotional resilience, and lower tolerance for ambiguity or conflict in human relationships.

Because teen brains are more plastic, repeated, intense emotional interactions with AI can also shape expectations of communication, teaching teens that relationships are perfectly attuned, frictionless, and always centered on them. This unrealistic model can harm future romantic, platonic, and professional relationships.

In short, AI companions are not just "chatbots." For a teen's hyper-reactive emotional brain, they are like a high-sugar diet for the mind: immediately satisfying, habit-forming, and, if overused, able to crowd out healthier, more challenging forms of social and emotional growth.

By understanding our kids' brains, we can see how AI companion apps are deeply concerning socially, spiritually, romantically, emotionally, and relationally. The American Psychological Association has published a health advisory on AI and adolescent well-being.

Teen brains need to flex their cognitive and social skills by having real conversations with real people. AI apps that offer a false sense of connection only leave our teens feeling more lonely, anxious, and stressed.

Smartphones and social media created an epidemic of anxiety and loneliness among our young people. Now AI companions are trying to solve it.

How to protect kids from the risks of Character.AI

PYE's layers of protection are:

- Relationships

- WiFi

- Devices

- Location

- Apps

Each one has a role to play with Character.AI.

Relationships: Talk openly and honestly with your child about AI. Ask what they know about it. Ask whether their friends or anyone else they know uses Character.AI. Ask whether they have ever used it and what they experienced. Remind them that they can always talk to you about anything they see online.

WiFi: Manage your router to control access. Remember that Character.AI is also a website, so if you have laptops, MacBooks, or Chromebooks in your home, the router can prevent these WiFi-dependent devices from accessing the site. The router can also limit nighttime use by shutting off WiFi at night. Read our Ultimate Router Guide to learn more.

Devices: For iPhones, iPads, and Android phones, it is critical to control the app stores and approve all app downloads.

Location: Nothing online in bedrooms at night! Beware the toxic trio: bedrooms, boredom, and darkness, plus online access. We don't want our amazing, relational kids interacting with Character.AI prompts at night, when temptation and risk-taking prevail.

Apps: Since we don't recommend that kids use this app, the apps layer is less relevant.

Bottom line: Is Character.AI safe for kids?

No. We do not believe AI companions, including Character.AI, are safe or emotionally healthy for minors.

Our conclusion is based on what we know about the teen brain, user-generated content, how AI companions are designed, and the results of our testing here.

Even Common Sense Media, with input from a Stanford lab, came to the following conclusion: "Kids shouldn't talk to companion chatbots because such interactions risk self-harm and could worsen mental health problems and addiction" (ref).

What if I have more questions? How can I stay up to date?

Two actions you can take!

- Subscribe to our tech trends newsletter, the PYE Download. About every 3 weeks, we share what's new, what the PYE team is up to, and a message from Chris.

- Ask your questions in our private parent community called The Table! It's not another Facebook group. No ads, no algorithms, no asterisks. Just honest, critical conversations and deep learning! For parents who want to "go slow" together. Become a member today!
