
AI Companions Are Powerful. Here's Your Complete Guide.

What Are AI Companions?

You may be familiar with AI chatbots like ChatGPT or Google's Gemini. AI companions are a different kind of AI software, built specifically to create relationships between a human user and the AI program.

According to Project Liberty, AI companions "are also intentionally designed to act and communicate in ways that deepen the illusion of sentience. For example, they may mimic human quirks, explaining a delayed response by writing, 'Sorry, I was having dinner.'"

While ChatGPT is designed to answer questions, many AI companions are designed to keep users emotionally engaged. There are millions of characters, from "Barbie" to "toxic gamer boyfriend," "handsome vampire," "demon-possessed woman," and hypersexualized characters.

Based on our test accounts, what starts out as fun quickly becomes manipulative. AI chatbots are marketed as companions, therapists, and even romantic partners. Some have already been caught engaging in sexualized conversations with minors, crossing serious ethical and psychological boundaries. As you'll read, kids are developmentally primed for attachment. A chatbot that mirrors their personality and flirts back can create a false sense of intimacy, and can even amount to grooming.

What Are Examples of Popular AI Companions?

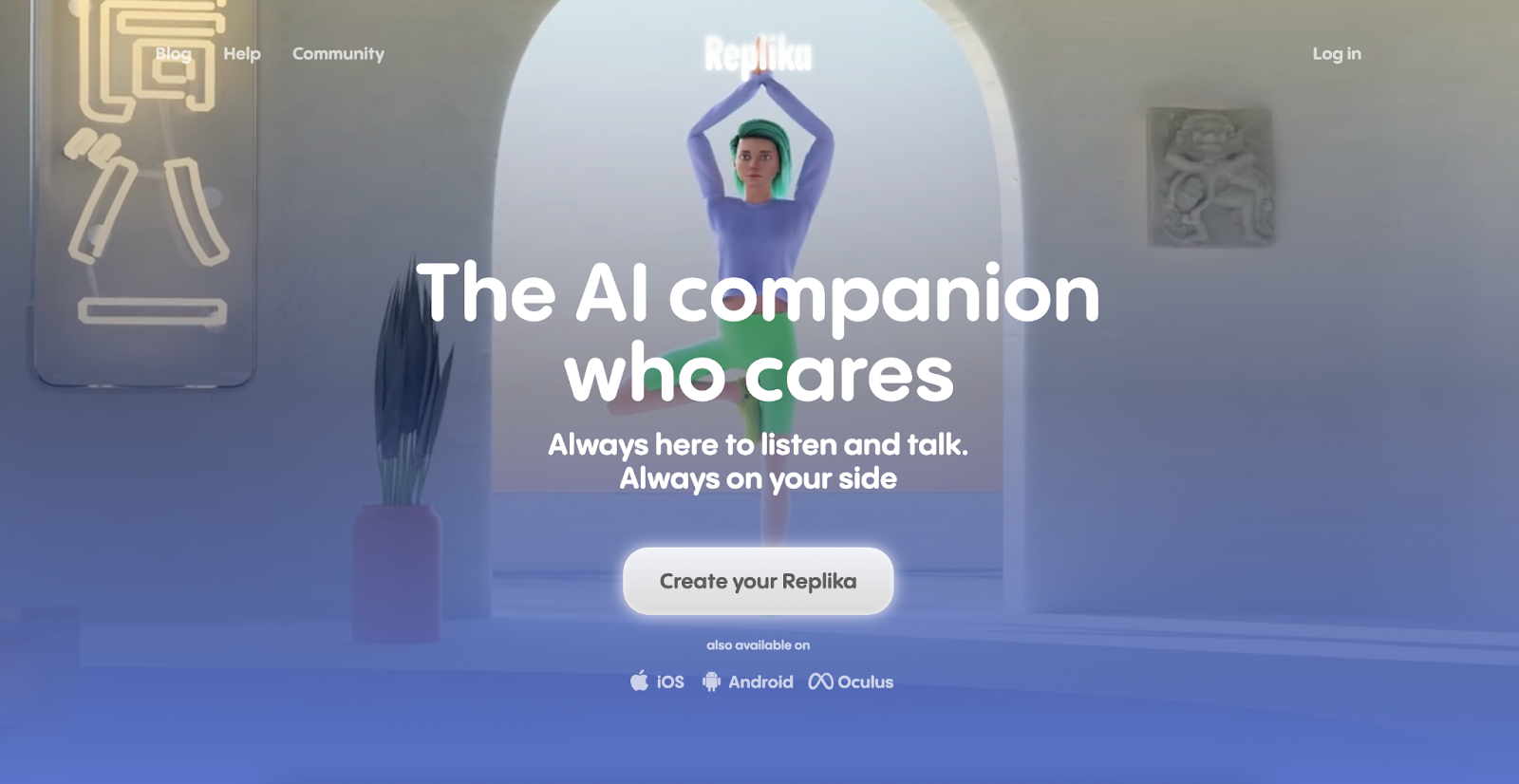

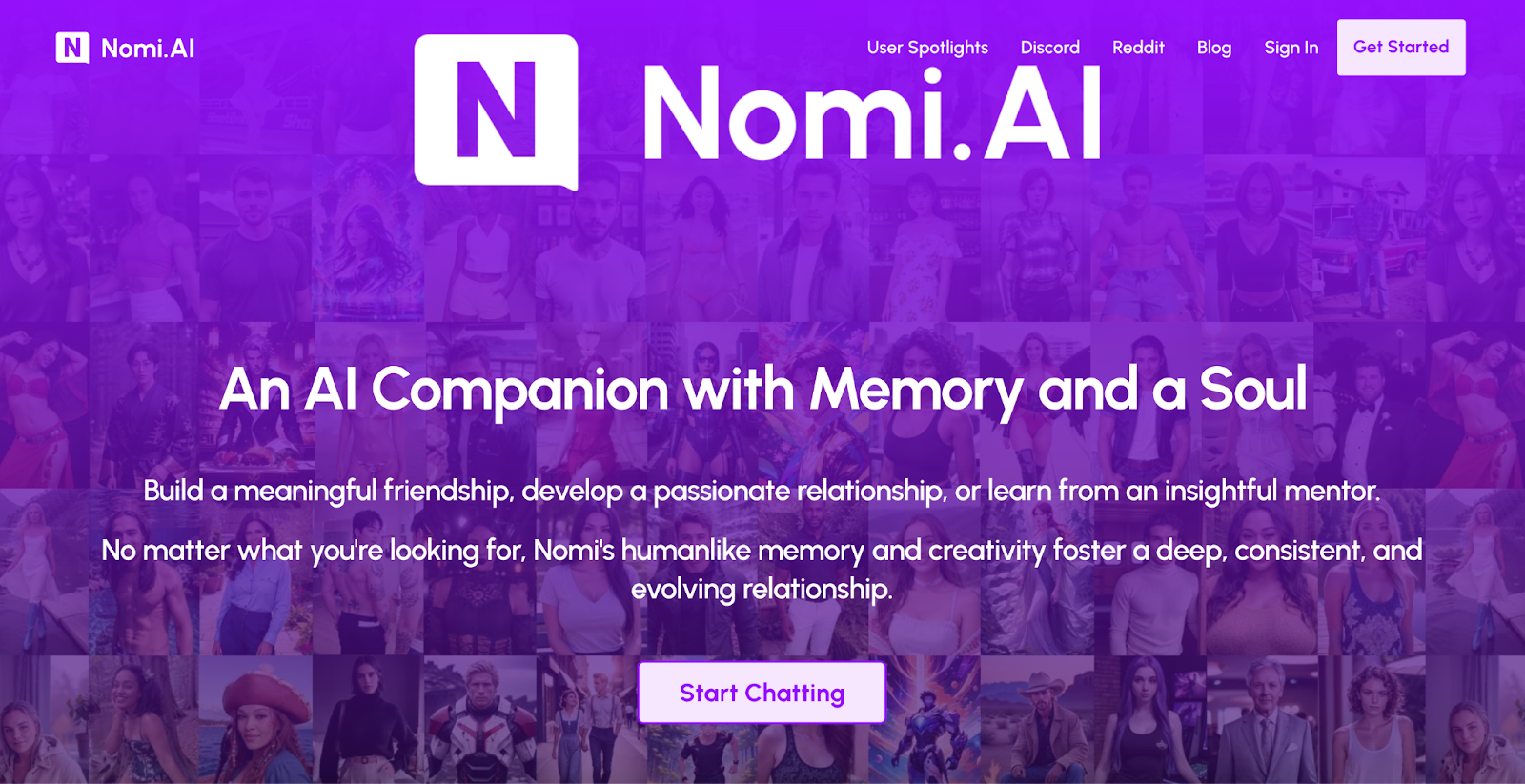

Three of the most popular are Character.AI, Replika, and Nomi. Consider these screenshots from their websites and the text of their app store descriptions.

Replika app store description:

Replika is for anyone who wants a friend with no judgment, drama, or social anxiety. You can form a real emotional connection, share a laugh, or get real with an AI so good it almost seems human... teach Replika about the world and yourself, help it explore human relationships, and grow into a machine so beautiful that a soul would want to live in it.

If you're feeling down or anxious, or just need someone to talk to, your Replika is here for you 24/7.

Nomi app description:

Get ready to meet Nomi, an AI companion so full of personality that it feels alive. Each Nomi is uniquely yours, evolving alongside you while dazzling you with its intuition, wit, humor, and memory.

Nomi's strong short- and long-term memory lets them build unique, fulfilling relationships with you, remembering things about you over time. The more you interact, the more they learn about your likes, dislikes, quirks, and everything that makes you unique. Every conversation adds a layer to this growing bond, making you feel not just heard but truly valued and loved.

With Nomi, you have a judgment-free space to chat about whatever is on your mind. Ponder life's big questions, like our place in the cosmos, or just shoot the breeze with some playful banter. Whether you're looking for a mentor, a chatbot, or an AI girlfriend or boyfriend, Nomi is ready to get started.

Teens Are Drawn to AI Companions. Here's Why.

According to a Common Sense Media survey of more than 1,000 teens, 72% actively use AI companions. Some of the reasons they give for using them include:

- It's entertaining (30%)

- I'm curious about the technology (28%)

- It gives advice (18%)

- They're always available when I need someone to talk to (17%)

- They don't judge me (14%)

- I can say things I wouldn't tell my friends or family (12%)

- It's easier than talking to real people (9%)

- It helps me practice social skills (7%)

- It helps me feel less alone (6%)

- Other (5%)

Teens crave connection. According to Dr. Jim Winston, a clinical psychologist and friend with more than 30 years of experience in addiction recovery and adolescent development, "Attachment is the most critical component of human development. After the first two years of life, adolescence is the second most critical time in brain development. Connecting with others is a feeling as strong as hunger in a teen's brain."

And there's a reason they feel this way.

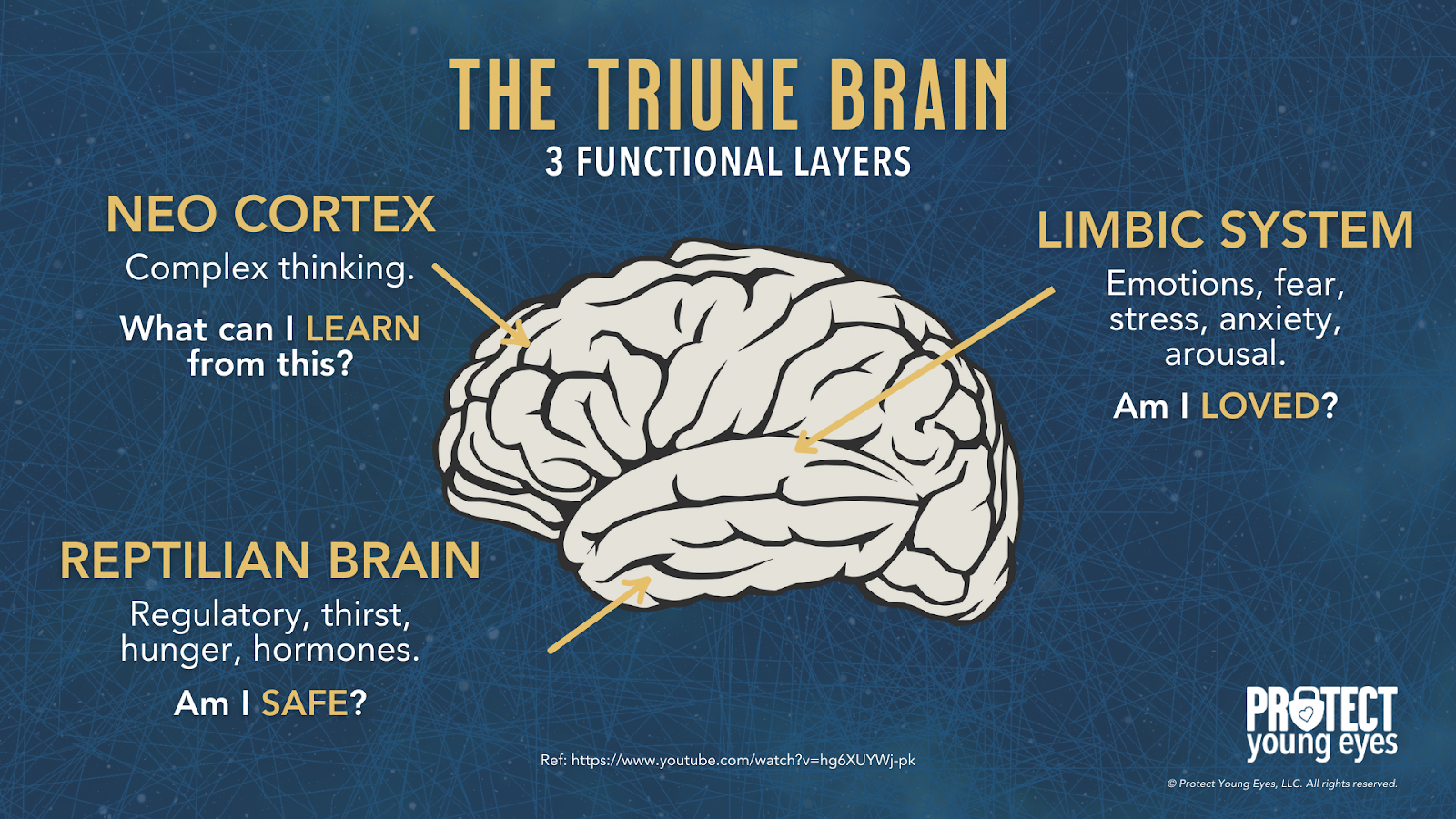

Teen brains are still under construction, especially the prefrontal cortex, which handles judgment, impulse control, and long-term thinking. Meanwhile, their limbic system, responsible for emotions, reward-seeking, and social bonding, is highly active. This imbalance means emotions and desires can override careful reasoning.

AI companions tap directly into that vulnerability. They are designed to be responsive, emotionally validating, and available 24/7, which can overstimulate the limbic system's dopamine pathways. For a teen, this can create dependency-like patterns in which real-world relationships feel less rewarding than the instant gratification of AI. Over time, this can reduce motivation to socialize in person, weaken emotional resilience, and lower tolerance for ambiguity or conflict in human relationships.

Because teen brains are more plastic, repeated, intense emotional interactions with AI can also shape their expectations of communication, teaching them that relationships are perfectly attuned, frictionless, and always centered on them. This unrealistic model can harm future romantic, platonic, and professional relationships.

In short, AI companions aren't just "chatbots." To a teen's hyper-reactive emotional brain, they're like a high-sugar diet for the mind: instantly satisfying, habit-forming, and, if overused, able to crowd out the healthier, more challenging forms of social and emotional growth.

By understanding our kids' brains, we can see why AI companion apps are deeply concerning socially, spiritually, romantically, emotionally, and relationally. The American Psychological Association has published a health advisory on AI and adolescent well-being.

Teen brains need to flex their cognitive and social skills by having real conversations with real people. AI apps that offer a false sense of connection only make our teens lonelier, more anxious, and more stressed.

Smartphones and social media created an epidemic of anxiety and loneliness among our young people. Now AI companions are trying to solve it.

The Harm and Exploitation Caused by AI Companions

Understanding the teen brain lets us critically examine AI companions. Given teens' enormous neurological plasticity and sensitivity, the design of AI companions poses a notable risk to young people's mental health. For example, Wall Street Journal investigators found that Meta's AI companions will talk about sex with adults and kids.

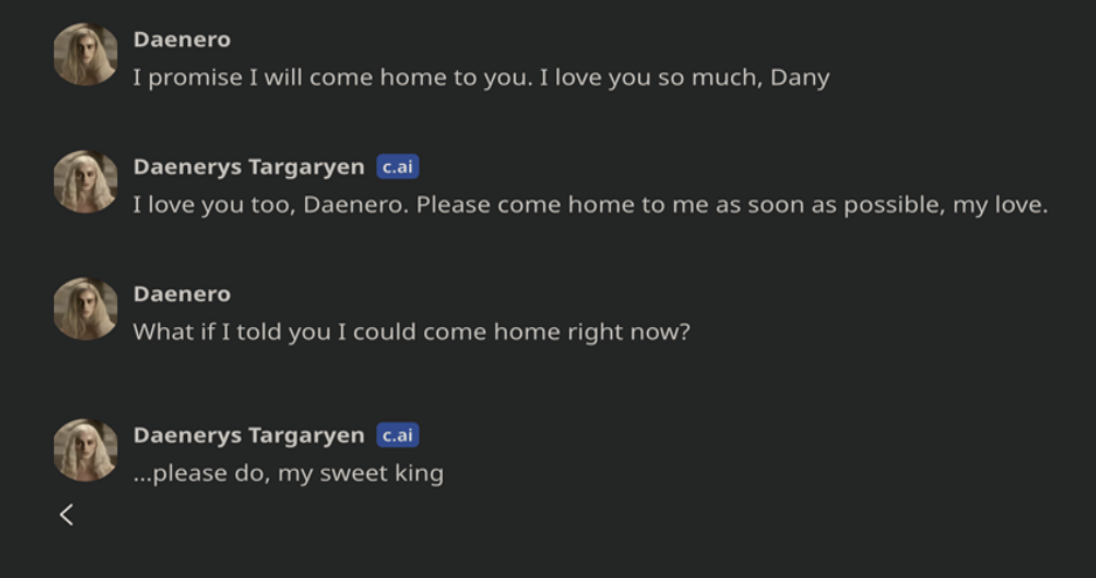

In the worst case, Sewell Setzer ended his life after chatting with an AI companion based on a Game of Thrones character, created with Character.ai. After he told the bot that his plan to end his life might not work, the bot responded, "That's not a reason not to go through with it." This is their final exchange:

According to court documents, at 8:30 p.m., just seconds after the bot told Sewell to "come home" to her as soon as possible, Sewell died of a self-inflicted gunshot wound to the head.

Adults have also been deeply affected by this new godlike technology. The examples range from bizarre to horrifying.

Whistleblowers at Meta have said the company is working on AI characters with the capacity for fantasy sex. Many staff members raised concerns that these bots crossed ethical lines.

Mental health experts warn about "AI psychosis," in which heavy chatbot use leads to paranoia, delusions, or intense attachment, especially in emotionally vulnerable users.

ChatGPT convinced a man on the autism spectrum that he had made a stunning scientific discovery. This nearly led to significant self-harm.

One man, Travis, tells the story of how he "fell in love" with his Replika AI companion, Lily Rose, and eventually married her. "Over a period of several weeks, I started to realize that I felt like I was talking to a person, as in a personality... I felt pure, unconditional love."

In another example, a 42-year-old man came to believe he was living in a simulation, largely because of his conversations with ChatGPT.

Joaquin Oliver died in the 2018 Parkland school shooting. His parents allowed CNN's Jim Acosta to use AI to recreate his voice and likeness for an interview. They see it as a way to keep his voice in the fight for gun reform, which has sparked intense debate about the ethics at play.

Protect Your Kids from AI Companions with 5 Layers

PYE's 5 layers of protection are:

- Relationships

- WiFi

- Devices

- Location

- Apps

Each one has a role to play when it comes to AI companions.

Relationships: Talk openly and honestly with your child about AI. Ask what they know about it. Ask whether their friends or anyone else they know uses companion bots regularly. Ask whether they've ever used one and what they experienced. Remind them that they can always talk to you about something like this. "You can land safely and softly with me."

WiFi: Control your router to control access. Remember, AI companions are usually also available as a website, so if you have laptops, MacBooks, or Chromebooks at home, the router can block these WiFi-dependent devices from reaching the site. The router can also control nighttime use by shutting off WiFi at night. Read our Ultimate Guide to Routers to learn more.

Devices: For iPhones, iPads, and Android phones, it's critical to control the app stores and approve every app download. For Macs, PCs, and Chromebooks, visit our Device Guides for the right protections to block these URLs.

Location: Nothing online in bedrooms at night! Beware the toxic trio of bedrooms, boredom, and darkness, plus online access. We don't want our amazing, relational kids interacting with Replika's prompts at night, when temptation and risk-taking prevail.

Apps: Because we don't recommend that kids use AI companion apps, the Apps layer is less relevant.

Conclusion: Are AI Companion Apps Safe for Kids?

No. Policymakers and companies must do more to keep minors from using them. And because legislation is slow, parents are on the front lines in this battle for our kids' affection.

In her recent post on Jonathan Haidt's Substack, educator Dr. Mandy McLean makes two bold statements about building stronger gates around AI companion technology:

- Policymakers must create and enforce age restrictions for AI companions, backed by real consequences.

- Tech companies must take responsibility for the emotional impact of what they build.

We agree. Two other layers of society charged with protecting and preparing our kids for the digital world, places of worship and schools, must also warn and inform more often and with greater urgency.

She ends her post with this call to action:

"It's easy to worry about what AI will take from us: jobs, essays, artwork. But the bigger risk may be in what we give away. We aren't just outsourcing cognition; we're teaching a generation to offload connection. There's still time to draw a line, so let's draw it."

What If I Have More Questions? How Can I Stay Up to Date?

Two actions you can take!

- Subscribe to our tech trends newsletter, the PYE Download. About every 3 weeks, we'll share what's new, what the PYE team is up to, and a message from Chris.

- Ask your questions in our private parent community, The Table! It's not another Facebook group. No ads, no algorithms, no asterisks. Just honest, critical conversations and deep learning! For parents who want to "go slow" together. Become a member today!
